Fantastic eyes

Why you see less than a robot

by Randall van Poelvoorde | Sept 24, 2021 | Developments

We humans naturally assume that our eyes show us a lot. In fact, there is a great deal we cannot see. In this article, Paul Voerman, Head of Innovations at TRICAS, and I explain where we stand and how robots can help us with their fantastic eyes.

We first cover the technical side and then give a few examples of how useful robot eyes can be.

Resolution

Robots can see at a much higher resolution than we can. From each eye, roughly a million nerve fibers carry the image that falls onto your retina to your brain, so about two million for both eyes together. If you compare nerve fibers to pixels, your eyes deliver about two million pixels. A good phone camera, at 20 million pixels, already has ten times that. And that is just the beginning: a robot can be fitted with cameras of far higher resolution still.
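As a rough illustration of the arithmetic above, here is a minimal Python sketch that treats the article's figure of two million nerve fibers as two million "pixels" and compares a camera sensor against it. The 5000 × 4000 sensor size and the function names are hypothetical, chosen only to give exactly 20 megapixels; they do not describe a specific phone.

```python
# Rough analogy from the article: treat every optic-nerve fiber as one "pixel".
EYE_PIXELS = 2_000_000  # the article's figure of two million nerve fibers


def megapixels(width: int, height: int) -> float:
    """Pixel count of a width x height sensor, in millions."""
    return width * height / 1_000_000


def times_eye(width: int, height: int) -> float:
    """How many times more pixels the sensor has than EYE_PIXELS."""
    return width * height / EYE_PIXELS


# Hypothetical sensor of exactly 20 megapixels, like a good phone camera.
width, height = 5000, 4000
print(f"{megapixels(width, height):.1f} MP, {times_eye(width, height):.0f}x the eye")
```

The comparison is only an analogy: the eye concentrates its detail in the center of vision and the brain combines many glances, so the numbers are an order-of-magnitude guide rather than a like-for-like measurement.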

Contrast

On a computer, colors are usually stored with 8 bits for each of red, green and blue: 256 × 256 × 256, or about 16.7 million colors. With difficulty, we humans can see a difference of about 5 points on that scale. A robot can distinguish much smaller differences. We can hardly tell the colors of the spheres below apart, but for a robot eye they pose no problem.

A robot eye is also not misled by the interpretations our brain adds. Humans interpret images continuously, and that, unfortunately, makes our view of reality less objective.
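To make the color numbers concrete, here is a minimal Python sketch. It counts the colors available with 8 bits per channel and checks whether two nearly identical colors cross a detection threshold. The threshold of 5 steps is our reading of the article's "5 points" figure. Taking the largest per-channel difference is a deliberate simplification: perceptual color-difference formulas such as CIEDE2000 are far more involved, and a real camera also has to cope with sensor noise. The sphere colors and function names are hypothetical.

```python
# 8 bits per channel (values 0-255) for red, green and blue.
LEVELS_PER_CHANNEL = 256
TOTAL_COLORS = LEVELS_PER_CHANNEL ** 3  # 16,777,216

# Assumption based on the article: people only just notice a difference of
# about 5 steps in a channel, while an idealized, noise-free camera
# registers a difference of a single step.
HUMAN_THRESHOLD = 5
CAMERA_THRESHOLD = 1


def max_channel_diff(a: tuple, b: tuple) -> int:
    """Largest per-channel difference between two RGB colors."""
    return max(abs(x - y) for x, y in zip(a, b))


def human_notices(a: tuple, b: tuple) -> bool:
    return max_channel_diff(a, b) >= HUMAN_THRESHOLD


def camera_notices(a: tuple, b: tuple) -> bool:
    return max_channel_diff(a, b) >= CAMERA_THRESHOLD


# Hypothetical colors of two spheres that look identical to us.
sphere_a = (200, 120, 80)
sphere_b = (202, 121, 80)
print(TOTAL_COLORS, human_notices(sphere_a, sphere_b), camera_notices(sphere_a, sphere_b))
```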

Speed

The human eye does not record separate frames, so its speed cannot really be expressed in frames per second. What matters is whether you see something at all (a spinning wheel) and how much detail you see (every spoke of that wheel). Here a camera is far better equipped than the human eye. For smooth slow-motion recordings, 960 frames per second is now quite common. That pales in comparison to the 100,000 frames per second of specialized cameras for super slow motion.

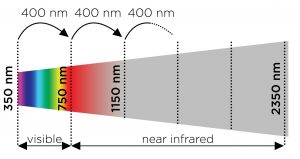
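To put the frame rates of 960 and 100,000 frames per second in perspective, here is a small Python sketch that computes how much slower than real time a recording plays back. It assumes playback at 30 frames per second, a common video rate; the function names are illustrative only.

```python
PLAYBACK_FPS = 30.0  # assumed playback rate of ordinary video


def slowdown(capture_fps: float, playback_fps: float = PLAYBACK_FPS) -> float:
    """How many times slower than real time the footage plays back."""
    return capture_fps / playback_fps


def playback_seconds(event_seconds: float, capture_fps: float,
                     playback_fps: float = PLAYBACK_FPS) -> float:
    """Screen time needed to show an event lasting event_seconds."""
    return event_seconds * slowdown(capture_fps, playback_fps)


for fps in (960, 100_000):
    print(f"{fps} fps: {slowdown(fps):.0f}x slower; "
          f"1 s of action lasts {playback_seconds(1, fps):.0f} s on screen")
```

Under this assumption, one second recorded at 960 frames per second lasts 32 seconds on screen, while at 100,000 frames per second it stretches to almost an hour.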

A slice of the electromagnetic spectrum

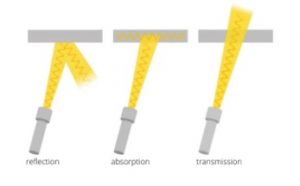

Our eyes cannot see near-infrared light. Take a look at the picture above and you'll see how much we are actually missing! Yet the same physics applies in this part of the spectrum as in the part we can see: light is reflected, absorbed and transmitted here too. Because many materials reflect and absorb near-infrared differently from visible light, properties that are invisible to us become visible in near-infrared.
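Reflection, absorption and transmittance can be summarized in a single relation. Assuming the material emits no light of its own (no fluorescence, for example) and counting scattered light as either reflected or transmitted, the fractions of incident light at wavelength λ that are reflected ($R$), absorbed ($A$) and transmitted ($T$) always add up to one:

$$R(\lambda) + A(\lambda) + T(\lambda) = 1$$

Because $R$, $A$ and $T$ each depend on the wavelength $\lambda$, two materials can have nearly the same values across the visible range and still differ clearly in the near-infrared. That difference is what a near-infrared camera picks up.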

Applications

Fortunately, we can equip robots and other machines with cameras that see sharply, quickly and in great detail. So what could they see that would be useful to us? Below is just the tip of the iceberg; there are thousands of applications.

– Contamination or mold on our food. Imagine your robot pointing out the freshest food in the store: superb, and much less chance of food poisoning! That little spot you cannot see stands out clearly in near-infrared. The color of food also holds much more information for a robot than for us.

– Irregularities on the skin of humans and animals. A robot can see these too, where we cannot. Think of skin cancer: detecting it early greatly increases the chance of survival! A mirror with a good camera built in could check you and your family daily without any hassle.

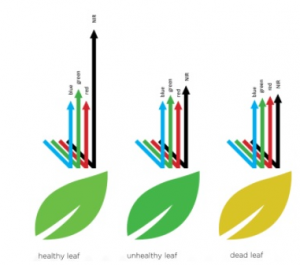

– Crop health. From a drone, you can quickly see which crops are healthy and which are sick. Using near-infrared, the drone can also tell exactly which crops are planted in a field.

– Different plastics that look the same to us. If you rely on people to sort plastics for recycling, different types end up on the same heap, which is highly undesirable in a circular economy. A camera that sees in near-infrared can tell these plastics apart.

The examples above are purely illustrative and are meant to get you thinking about the possibilities.
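To make the crop-health example a little more concrete: one widely used way to judge plant health from near-infrared images is the Normalized Difference Vegetation Index (NDVI), which compares how strongly a patch reflects near-infrared and red light. Healthy leaves reflect a lot of near-infrared and absorb much of the red, so they score high. The Python sketch below is a minimal illustration, not the method behind the article's examples; the reflectance values, the 0.4 threshold and the function names are hypothetical.

```python
def ndvi(nir: float, red: float) -> float:
    """Normalized Difference Vegetation Index for one pixel or patch.

    nir and red are reflectances (0..1) in the near-infrared and red bands.
    The result lies between -1 and 1; dense, healthy vegetation scores high.
    """
    total = nir + red
    if total == 0:
        return 0.0  # no signal in either band; treat as neutral
    return (nir - red) / total


def flag_unhealthy(patches: list, threshold: float = 0.4) -> list:
    """Indices of patches whose NDVI falls below a hypothetical threshold."""
    return [i for i, (nir, red) in enumerate(patches) if ndvi(nir, red) < threshold]


# Hypothetical drone readings (nir, red) for three patches of a field.
field = [(0.50, 0.08), (0.30, 0.15), (0.45, 0.05)]
print([round(ndvi(nir, red), 2) for nir, red in field])
print(flag_unhealthy(field))
```

In practice the same formula is applied to every pixel of a drone image, and what counts as a low value depends on the crop, its growth stage and the camera used.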
